This short story originally appeared in Vice.

None of our robots have faces, per se, but it's not hard to tell how they feel. Body language.

Today the medics dragged in one of their own—one of the medical battalion Bears. You know the Bears. Big ol’ things. Fat torso. Tank treads. Curved arms.

We don’t send a lot of human beings into the Engagement Zone, but when we do and one of them gets hurt, it’s a Bear’s job to literally scoop them up and carry them back to the aid station.

The human aid station, I mean.

Well the medics—yes, we call them that even though “mechanic” or even “wrecker” is probably more accurate—came rolling in towing this beat-up Bear and the Bear was actually slumping. Shoulders rounded. Arms dragging. A defeated kind of gesture.

The latest Bears can actually talk, sort of. They’ve got sensors that read human expressions and simple algorithms that cue a range of pre-recorded phrases played via a speaker embedded in the robot’s chest. “It’s going to be okay” is the main one. And dammit if this Bear wasn’t saying that to itself, over and over at low volume, as though reassuring himself. “It’s going to be okay. It’s going to be okay.”

The medics pointed their sensors at me and spoke in their weird, succinct monotone. And in unison, an eerie habit I once reported as a design flaw but that the Army told me wasn’t worth the cost to fix. I mean, I get it. How many millions of bots does the Army own?

“Good evening, major. Bear serial number 805813. Charlie, 1-62 Medical. Unresponsive in the E.Z.”

I dismissed the medics. They turned on their tracks and trundled away, sensor turrets swiveling left and right, compulsively assessing every other robot in the clamshell as they exited, looking for signs of damage, evidence of malfunction, any excuse at all to do the job we’d programmed them to do.

Robots are great like that. Earnest. But I’d tagged all the bots in here with tiny transponders broadcasting coded exclamations of perfect health. Nothing could be farther from the truth. A bot wouldn’t be here if it were healthy. Not as a patient.

A work party of frantic little repair drones swarmed the Bear. One jacking into its external port for a diagnostic. Another scanning shoulder to treads with a 3D laser, looking for damage. A third machine waving a proboscis before the Bear’s own electro-optical sensors, like an optometrist holding up one digit in front of a patient. Follow my finger.

No internal problems. Body a bit scuffed but otherwise fine. Sensor reaction a tad slow for my liking but within normal range. The laser-bot turned to me. “All yours, boss.” I like Laser. He calls me “boss” because I once called myself that in front of him.

Perceptive. By design, I suppose.

Shit, I mean it. Not him. Don’t tell anybody I said that. We can get in big trouble for anthropomorphizing the automatons. “All yours” meant that whatever was wrong with the Bear, it wasn’t something a robot could diagnose or fix.

I’m a combat psychologist for military robots, for lack of a better term. Actually, there is a better term, but the colonel hates it: drone shrink.

I pulled up a folding chair and sat in front of the Bear. Outside, miles away, I could hear the distant sound of explosions. Artillery. Ours or theirs, I couldn’t tell. Probably both. The guns had been busy all day—shooting at what, I didn’t know.

The Bear was listening to the explosions, too, I knew. I also knew that he, unlike me, wasn’t afraid. Not for himself, at least. Although it’s possible he was afraid for me. We program Bears to be very, very concerned for a person’s safety but to disregard their own except when our safety depends on theirs. It’s a neat bit of code.

Shit, I’m doing it again. It.

“How are you doing, buddy?” I asked the Bear. But of course he wasn’t programmed to respond to that kind of query.

I used to treat actual human beings, you know. Before the war.

I found the Bear on the wireless network and tapped in my access code on my tablet. His log, like all robot logs, was a long chain of seemingly random numbers and letters.

There were timestamps, coordinates, azimuths and pace counts. Notations of external signals. Brief recaps of algorithmic chains: records of the Bear’s thoughts, essentially. His memories. As I scanned the log for the fourth time, a pattern emerged. The Bear had spent all morning traveling back and forth between one sector of the E.Z. and the aid station.

I had a hunch. I pulled up a map and inspected the Bear’s sector, opened up some spot reports tagged with the same location. Ground zero for the day’s artillery exchanges.

Thirteen killed in action on our side. Twenty-two wounded. Human casualties.

Holy shit. That was the single biggest toll of dead and wounded I’d seen in my two tours of duty. I was surprised the news drones hadn’t caught on yet.

Why in the world were so many human beings in the E.Z.? For that matter, why were so many people hanging out in one group? Even the mixed units I was familiar with tended to have just a handful of human soldiers and hundreds of robots.

I dug deeper into the reporting and suddenly it all made sickening sense. A convoy had malfunctioned and taken a wrong turn, driving straight into the E.Z. and the artillery duel—where it promptly broke down.

The trucks were drones, of course. But the passengers were human coders en route to the 1st Cavalry Division headquarters. Coding is the one job that the Army never, ever assigned to machines.

That isn’t just Pentagon policy. Automated coding is actually against the law, not that most people needed a law to tell them to be skeptical of robots teaching robots. To everyday Americans, it’s bad enough that machines teach themselves—a pretty much inevitable function of any truly sophisticated algorithm.

So the Bear was going into the E.Z. to rescue injured coders and retrieve the dead. On his last trip he got halfway to the aid station and just stopped.

“What happened, bud?” I asked the sad bot. He just stared at me. Bears aren’t programmed to be very good patients.

I accessed the Bear’s video archive and searched for the timestamp corresponding with that last, incomplete trip. From the Bear’s point of view, I watched a wiry young man—battered, bleeding and writhing in the Bear’s gentle but firm embrace, flinching with each nearby blast of an artillery shell.

The Bear tried to soothe the man. “It’s going to be okay. It’s going to be okay.”

“It’s not going to be fucking okay!” the coder screeched. Crack! Boom! Crack!

“You don't understand, you dumb fucking machine! That artillery—I fucking programmed it myself! In grad school!”

Oh the irony. Curse the gods. Moan moan, wail wail. Et cetera.

It happened in an instant, of course. Robots don’t need long to make up their minds. The Bear just halted right where he was and dropped his patient like a sack of shit. The guy wriggled around at the Bear’s treads for a few minutes before another Bear rolled over and scooped him up.

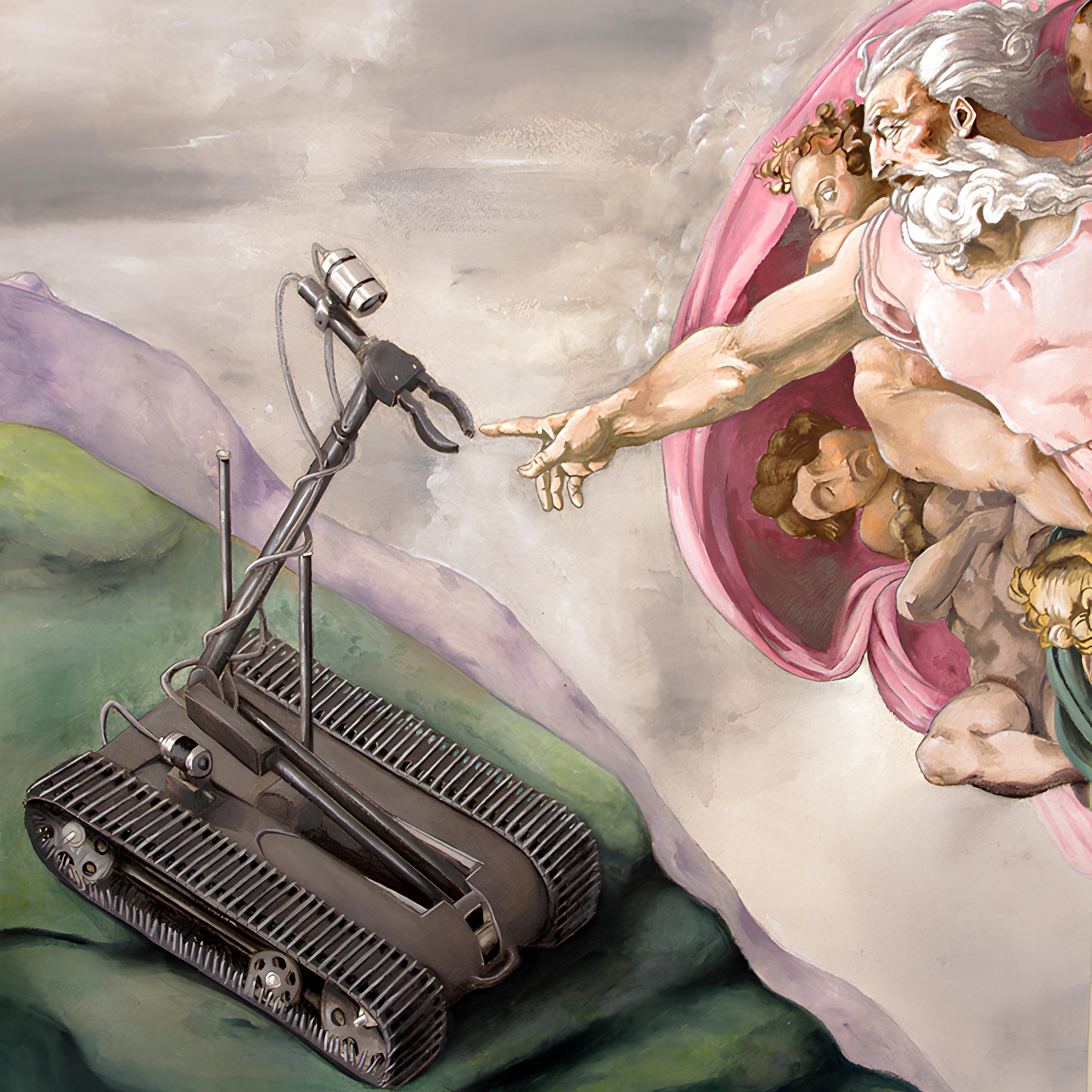

Soon the medics arrived and rigged up the Bear for towing—to me. Of course I recognized immediately what had gone wrong. It was Asimov 101. I’d seen it before.

Look—Bear algorithms are smart enough to assess threats to human beings and guard against those threats. On today’s machine battlefield, the greatest threat to a person is usually a robot. And robots don’t generally appreciate that they’re man-made. They don’t understand that they are, deep in their code, extensions of humanity. So they rarely blame humanity for unleashing smart, deadly machines on the world.

But that stupid, hysterical coder straight-up told the Bear that the same drone artillery that had wounded him and killed his friends was, in fact, his own creation. That the man was a mortal danger to himself.

What’s a Bear to do? Human beings have been trying to untie that paradoxical knot for thousands of years and still aren’t making much progress. A Bear’s got the mind of a very smart dog or a kinda dumb ape. That is to say, robots make terrible philosophers.

Poor, sweet Bear. I didn’t want to put him down, but I was already in trouble with the colonel for sparing that funny little bomb-disposal robot that had abandoned his mission and somehow circumvented his own programming in order to rescue another bot from an ambush.

A robot who felt kinship with his own species. Now that was a machine worth saving.

But a confused Bear wallowing in his newfound realization of humanity’s ultimate self-destructiveness? A nice case study for a freshman programming class, sure. But hardly something for Army R&D, which by now must have thousands of anomalous automatons in cold storage.

So I navigated a series of menus until I reached the FORMAT command, clicked YES when it asked me if I was sure, and let the ticker count down from 10 without pressing CANCEL. Ten, 9, 8, 7, 6 …

“It’s going to be okay,” the Bear said. “It’s going to be okay.”

“Sure, bud,” I said. I grasped the Bear’s big, strong, padded hand with my own, and held on until the countdown reached zero.